In this tutorial, we build an AI agent that fetches and processes information from the internet, using the LangChain library together with OpenAI's GPT-4 and Tavily's search API. With this setup, the agent can retrieve data from the web, interpret it, and use it to answer questions.

Setup and Initial Configuration

Importing Libraries and Loading Environment Variables

First, import the Python libraries needed for handling environment variables, API interactions, and text processing. LangChain provides modules for model interaction, document loading, text splitting, vector storage, and agents. We also import load_dotenv from the dotenv library so that sensitive values such as API keys can be kept in a .env file instead of in the code.

from langchain_openai import ChatOpenAI

from dotenv import load_dotenv

import os

from langchain_community.document_loaders import WebBaseLoader

from langchain_openai import OpenAIEmbeddings

from langchain_community.vectorstores.faiss import FAISS

from langchain_text_splitters import RecursiveCharacterTextSplitter

from langchain.tools.retriever import create_retriever_tool

from langchain_community.tools.tavily_search import TavilySearchResults

from langchain import hub

from langchain.agents import create_openai_functions_agent

from langchain.agents import AgentExecutor

load_dotenv()  # Loads the environment variables from a .env file

Specifying the Data Source and Model

Define the URL to fetch the data from, and set up the GPT-4 model using an API key read from the environment variables. This way your API keys are never hard-coded into your scripts, which reduces the risk of leaking them.

url = "https://www.ibm.com/topics/artificial-intelligence"

model = ChatOpenAI(model="gpt-4", api_key=os.getenv("OPENAI_API_KEY"))

Data Acquisition and Processing

Fetching and Splitting Documents

Use WebBaseLoader from LangChain's community tools to load documents directly from the specified URL. This tool simplifies web scraping and document loading. Once the documents are fetched, they are split into smaller, more manageable pieces using RecursiveCharacterTextSplitter. Splitting matters because each chunk is embedded and retrieved on its own, so smaller chunks keep the retrieved context focused and within the model's context window.
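To make the effect of splitting concrete, the following is a toy sketch in plain Python, not LangChain's implementation. It cuts a string into fixed-size windows that overlap, so text near a boundary appears in two neighbouring chunks. The function name split_with_overlap and the default values are illustrative assumptions; RecursiveCharacterTextSplitter also exposes chunk_size and chunk_overlap parameters, and in addition prefers to break at paragraph, line, and word boundaries.

```python
def split_with_overlap(text, chunk_size=1000, chunk_overlap=200):
    """Cut text into windows of chunk_size characters that overlap by chunk_overlap.

    Hypothetical helper for illustration only; it ignores paragraph and
    word boundaries, which the real splitter tries to respect.
    """
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be non-negative and smaller than chunk_size")

    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks
```

For example, split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=2) yields four chunks, each sharing two characters with its neighbour. In the tutorial itself, the LangChain splitter is used with its default settings: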

docs = WebBaseLoader(url).load()

text_splitter = RecursiveCharacterTextSplitter()

documents = text_splitter.split_documents(docs)

Creating Embeddings and Vector Storage

With the documents split, the next step involves converting the textual data into embeddings using OpenAIEmbeddings. These embeddings are then stored in a FAISS vector store, which facilitates efficient similarity search and retrieval of data. FAISS is particularly useful for handling large volumes of data due to its optimized algorithms for nearest neighbor search.
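This step can be sketched as follows. It is a minimal sketch under stated assumptions, not necessarily the author's exact code: it assumes OPENAI_API_KEY is set in the environment and that the faiss-cpu package is installed, and build_vector_store is a hypothetical helper name. The imports sit inside the function so that defining the helper does not itself require the packages or a key.

```python
def build_vector_store(documents):
    """Embed the split documents and index them in a FAISS vector store.

    Assumptions: OPENAI_API_KEY is set and the faiss-cpu package is installed.
    build_vector_store is a hypothetical helper name, not a LangChain API.
    """
    # Imported lazily so that defining the helper needs no packages or API key.
    from langchain_community.vectorstores.faiss import FAISS
    from langchain_openai import OpenAIEmbeddings

    embeddings = OpenAIEmbeddings()  # reads OPENAI_API_KEY from the environment
    # Each chunk is sent to the embedding model and its vector is added to the index.
    return FAISS.from_documents(documents, embeddings)
```

With the documents list from the splitting step, vector = build_vector_store(documents) would give a store whose similarity_search("What is AI?", k=3) method returns the three chunks closest to the query.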

Building Retrieval Tools

Setting Up Retriever and Search Tools

Turn the vector store into a retriever and wrap it as a tool with create_retriever_tool, so that the agent can look up the most relevant document sections for an incoming query. In addition, integrate Tavily's API through TavilySearchResults to add external web search, allowing the agent to fetch information that the loaded page does not cover.
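A minimal sketch of the two tools might look like this. It assumes TAVILY_API_KEY is set in the environment and that vector_store is a LangChain vector store such as the FAISS index described earlier. The helper name build_tools, the tool name "ai_page_search", and the description text are illustrative choices, not taken from the original code.

```python
def build_tools(vector_store):
    """Return the two tools the agent may call: a page retriever and web search.

    Assumptions: TAVILY_API_KEY is set in the environment. The helper name,
    the tool name and the description are illustrative.
    """
    # Imported lazily so that defining the helper needs no packages or API key.
    from langchain.tools.retriever import create_retriever_tool
    from langchain_community.tools.tavily_search import TavilySearchResults

    # Expose the vector store through the standard retriever interface.
    retriever = vector_store.as_retriever()
    # The name and description tell the model when this tool is appropriate.
    retriever_tool = create_retriever_tool(
        retriever,
        "ai_page_search",
        "Search the loaded IBM page about artificial intelligence. "
        "Use this tool for questions that the page is likely to answer.",
    )
    search_tool = TavilySearchResults()  # reads TAVILY_API_KEY from the environment
    return [retriever_tool, search_tool]
```

The description passed to create_retriever_tool matters in practice: the model reads it when deciding whether to query the indexed page or fall back to web search.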

Launching the AI Agent

Creating and Executing the Agent

Use LangChain's create_openai_functions_agent to assemble the AI agent from the model, the tools, and a predefined prompt template pulled from the LangChain hub. The resulting agent decides which tool to call for a given query. Finally, an AgentExecutor runs the agent's actions, calling the chosen tools and feeding their results back to the model until it produces an answer.
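The assembly described above can be sketched as follows. This is a sketch under assumptions rather than the author's exact code: the text does not name the hub prompt, so the public "hwchase17/openai-functions-agent" prompt is assumed here, and build_agent_executor is a hypothetical helper name. Pulling the prompt requires network access.

```python
def build_agent_executor(model, tools):
    """Combine a chat model, a list of tools and a hub prompt into a runnable agent.

    build_agent_executor is a hypothetical helper name, not a LangChain API.
    """
    # Imported lazily so that defining the helper needs no packages or API key.
    from langchain import hub
    from langchain.agents import AgentExecutor, create_openai_functions_agent

    # Assumption: this public prompt is the template meant in the text.
    prompt = hub.pull("hwchase17/openai-functions-agent")
    # The agent decides which tool to call; the executor actually runs the calls.
    agent = create_openai_functions_agent(model, tools, prompt)
    return AgentExecutor(agent=agent, tools=tools, verbose=True)
```

Given a chat model and a tools list, build_agent_executor(model, tools).invoke({"input": "What is machine learning?"}) would return a dictionary whose "output" key holds the agent's answer. Setting verbose=True prints each tool call, which helps when checking whether the agent used the retriever or the web search.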

This comprehensive setup demonstrates building a powerful, internet-connected AI agent that can intelligently process, retrieve, and interact with information sourced from across the web. This can be particularly useful in fields like research, customer support, and any area where quick, accurate information retrieval is valuable.

Full Code

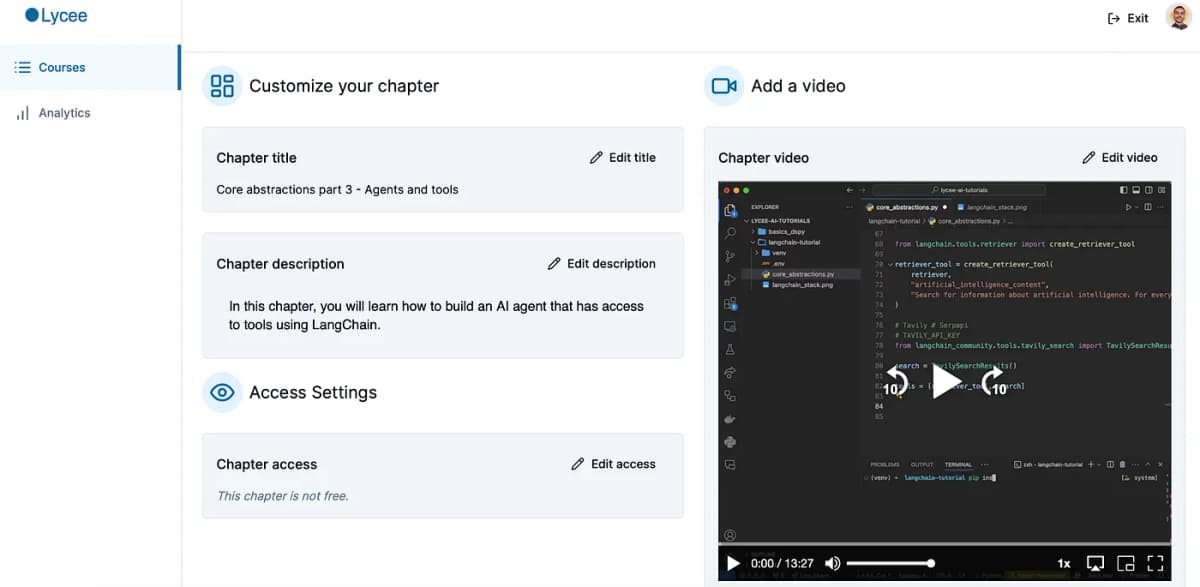

For those interested in exploring this project further or implementing it themselves, the full source code is available. To gain access, register for the LangChain module on Lycee.ai.

Here is the link to the module: LangChain Module

By signing up, you can explore this and other projects, enhancing your understanding of how to build complex AI systems.
